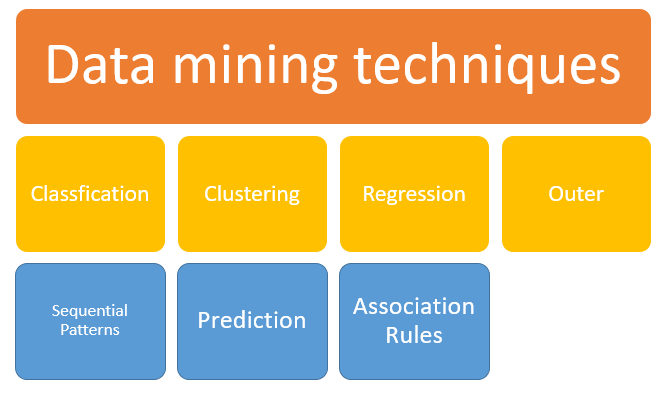

It is hard for data mining beginners to find free video tutorials on data mining techniques in a single place. For that reason, I have created a new page on my site that compiles videos of practical data mining techniques using well-known software and tools available on the market today. The videos are grouped by technique: classification, clustering, regression, neural networks, and so on. I will try to update the list every week as new videos become available on the web. If you know of other video tutorials that use software or tools not listed here, feel free to add them in the comment section.

For an Overview of Data Mining Techniques, visit here.

1. Classification

Software: Rapidminer 5.0

This video shows how to import training and prediction data, add a classification learner, and apply the model.

Application: building a gold trend classification model.

Software: Rapidminer 5.0

This video shows how to use Rapidminer to create a decision tree to help us find “sweet spots” in a particular market segment. This video tutorial uses the Rapidminer direct mail marketing data generator and a split validation operator to build the decision tree.

Application: creating Decision Trees for Market Segmentation.
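For readers who want to see the idea outside of any particular tool, here is a minimal Python sketch of a decision tree evaluated with split validation. It is not what the video or Rapidminer does internally: the data generator, the "sweet spot" rule (age 30 to 50, income of at least 60), the 70/30 split, and all function names are assumptions made up for illustration.

```python
import random

def make_direct_mail_data(n, rng):
    """Hypothetical generator: respondents cluster in one age/income segment."""
    rows = []
    for _ in range(n):
        age = rng.uniform(18, 80)
        income = rng.uniform(20, 120)  # in thousands
        responded = int(30 <= age <= 50 and income >= 60)
        rows.append((age, income, responded))
    return rows

def gini(labels):
    """Gini impurity of a list of 0/1 labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2.0 * p * (1.0 - p)

def best_split(rows):
    """Return (feature index, threshold) with the lowest weighted Gini, or None."""
    best, best_score = None, gini([r[-1] for r in rows])
    for f in range(len(rows[0]) - 1):
        for t in sorted({r[f] for r in rows}):
            left = [r[-1] for r in rows if r[f] <= t]
            right = [r[-1] for r in rows if r[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(rows)
            if score < best_score:
                best, best_score = (f, t), score
    return best

def build_tree(rows, depth=0, max_depth=3):
    """Grow a small tree; leaves are the majority class (0 or 1)."""
    labels = [r[-1] for r in rows]
    majority = int(sum(labels) * 2 >= len(labels))
    split = best_split(rows) if depth < max_depth else None
    if split is None:
        return majority
    f, t = split
    left = [r for r in rows if r[f] <= t]
    right = [r for r in rows if r[f] > t]
    return (f, t,
            build_tree(left, depth + 1, max_depth),
            build_tree(right, depth + 1, max_depth))

def predict(tree, row):
    """Walk from the root to a leaf and return its class."""
    while isinstance(tree, tuple):
        f, t, left, right = tree
        tree = left if row[f] <= t else right
    return tree

# Split validation: train on 70% of the rows, measure accuracy on the other 30%.
rng = random.Random(42)
data = make_direct_mail_data(400, rng)
rng.shuffle(data)
cut = int(0.7 * len(data))
train, test = data[:cut], data[cut:]
tree = build_tree(train)
accuracy = sum(predict(tree, r) == r[-1] for r in test) / len(test)
print(f"hold-out accuracy: {accuracy:.2f}")
```

The thresholds stored in `tree` are the learned boundaries of the segment, which is the kind of information you would read off the tree to describe a market segment.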

Software: STATISTICA

This video shows how to use CHAID decision trees to classify good and bad credit risk. CHAID decision trees are particularly well suited for large data sets and are often applied in marketing segmentation. The session discusses the analysis options in STATISTICA and reviews the CHAID output, including the decision trees and performance indices.

2. Clustering

Software: STATISTICA

This video shows how to use the clustering tools available in STATISTICA Data Miner and demonstrates the K-means clustering tool as well as the Kohonen network clustering tool.

Application: Clustering tools are beneficial when you want to find structure or clusters in data but don't necessarily have a target variable. Clustering is often used in marketing research, among many other applications.
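As a rough illustration of what a K-means tool does when there is no target variable, here is a small Python sketch. It is a plain textbook version of K-means, not the STATISTICA implementation; the two synthetic blobs, the seeds, and the function names are assumptions chosen only for this example.

```python
import random

def squared_distance(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def assign(points, centroids):
    """Label each point with the index of its nearest centroid."""
    return [min(range(len(centroids)),
                key=lambda i: squared_distance(p, centroids[i]))
            for p in points]

def kmeans(points, k, iterations=20, seed=0):
    """Alternate between assigning points and recomputing centroids."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k random data points
    for _ in range(iterations):
        labels = assign(points, centroids)
        new_centroids = []
        for i in range(k):
            members = [p for p, lab in zip(points, labels) if lab == i]
            if members:
                new_centroids.append(
                    tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(centroids[i])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged: centroids stopped moving
        centroids = new_centroids
    return centroids, assign(points, centroids)

# Synthetic data with no target column: two blobs around (2, 2) and (8, 8).
rng = random.Random(7)
points = [(rng.gauss(2, 0.5), rng.gauss(2, 0.5)) for _ in range(50)]
points += [(rng.gauss(8, 0.5), rng.gauss(8, 0.5)) for _ in range(50)]

centroids, labels = kmeans(points, k=2)
for c in sorted(centroids):
    print(f"centroid at ({c[0]:.2f}, {c[1]:.2f})")
```

Note that the algorithm never sees which blob a point came from; the grouping comes from distances alone. Real data usually needs feature scaling first, since K-means is sensitive to the units of each column.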

Software: WEKA

This video shows how to use the clustering algorithms available in WEKA and demonstrates the K-means clustering tool.

Application: building a cluster model of bank customers based on mortgage applications.

3. Neural Networks (NN)

– Neural networks are a sophisticated modeling tool, capable of modeling very complex relationships in data.

Software: WEKA

This video shows how to use neural network functions available in WEKA to classify real weather data.

Application: building a weather prediction model.

4. Regression

This video explores the application of neural networks to a regression problem using STATISTICA Automated Neural Networks. The options used for regression are similar to those for other neural network applications such as classification and time series. The episode explores various analysis options and demonstrates working with neural network output.

This video uses the beverage manufacturing regression data to explore C&RT as well as the other tree algorithms. It reviews the options and parameters, as well as the important output.

5. Evolutionary/Genetic Algorithms

Software: Rapidminer 5.0

This video highlights the data generation capabilities of Rapidminer 5.0, in case you want to tinker around, and shows how to use a Genetic Optimization data pre-processor within a nested experiment.

Software: Rapidminer 5.0

This video discusses some of the parameters that are available in the Genetic Algorithm data transformers to select the best attributes in the data set. We also replace the first operator with another Genetic Algorithm data transformer that allows us to manipulate population size, mutation rate, and the selection scheme (tournament, roulette, etc.).
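To show how population size, mutation rate, and tournament selection fit together, here is a small Python sketch of genetic attribute selection. It is a generic illustration, not Rapidminer's operator: the fitness function is a toy stand-in for a validated model score, and `RELEVANT`, `N_ATTRIBUTES`, and every parameter value are assumptions invented for the example. Roulette selection is not shown.

```python
import random

N_ATTRIBUTES = 12
RELEVANT = {0, 3, 7}  # hypothetical: the attributes that actually help the model

def fitness(mask):
    """Toy stand-in for validated model performance (an assumption):
    reward each relevant attribute kept, penalise each irrelevant one."""
    hits = sum(1 for i in RELEVANT if mask[i])
    extras = sum(mask) - hits
    return hits - 0.25 * extras

def tournament(population, rng, size=3):
    """Tournament selection: draw a few individuals, keep the fittest."""
    return max(rng.sample(population, size), key=fitness)

def crossover(a, b, rng):
    """One-point crossover of two attribute masks."""
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]

def mutate(mask, rate, rng):
    """Flip each bit independently with probability `rate`."""
    return [bit ^ 1 if rng.random() < rate else bit for bit in mask]

def evolve(population_size=30, mutation_rate=0.05, generations=40, seed=1):
    """Evolve attribute masks and return the best one seen."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(N_ATTRIBUTES)]
                  for _ in range(population_size)]
    best = max(population, key=fitness)
    for _ in range(generations):
        population = [
            mutate(crossover(tournament(population, rng),
                             tournament(population, rng), rng),
                   mutation_rate, rng)
            for _ in range(population_size)
        ]
        champion = max(population, key=fitness)
        if fitness(champion) > fitness(best):
            best = champion
    return best

best_mask = evolve()
selected = [i for i, bit in enumerate(best_mask) if bit]
print("selected attributes:", selected, "fitness:", fitness(best_mask))
```

In a real experiment the fitness would come from training and validating a learner on the selected attributes, which is what makes genetic attribute selection slow: every individual in every generation costs one model evaluation.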

Software: Rapidminer 5.0

This tutorial highlights Rapidminer’s weighting operator using an evolutionary approach. We use financial data to preweight inputs before we feed them into a neural network model to try to better classify a gold trend.

For more information about data mining, click here.