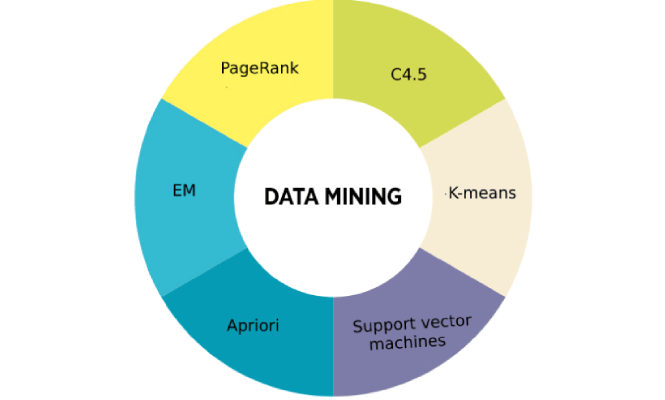

Choosing a data mining algorithm is not an easy task. According to the “Data Mining Guide”, if you’re just starting out, it’s probably a good idea to experiment with several techniques to get a feel for how they work. Your choice of algorithm will depend upon:

the data you’ve gathered,

the problem you’re trying to solve,

the computing tools you have available to you.

Let’s take a brief look at four of the more popular algorithms.

1. Regression

Regression is one of the oldest and best-known statistical techniques used by the data mining community. Basically, regression takes a numerical dataset and develops a mathematical formula that fits the data. When you’re ready to use the results to predict future behavior, you simply take your new data, plug it into the developed formula and you’ve got a prediction! The major limitation of this technique is that it only works well with continuous quantitative data (like weight, speed or age). If you’re working with categorical data where order is not significant (like color, name or gender), you’re better off choosing another technique.
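To make the “fit a formula, then plug in new data” idea concrete, here is a minimal sketch of the simplest case: a straight line fitted by ordinary least squares. The function names `fit_line` and `predict` and the sample numbers are made up for illustration; real projects would normally rely on a statistics library instead.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Spread of x around its mean, and how x and y vary together.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0:
        raise ValueError("all x values are identical; no line can be fitted")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Plug a new x value into the fitted formula."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical data: age in years against weight in kilograms.
ages = [2, 4, 6, 8, 10]
weights = [12.5, 16.0, 20.5, 25.5, 31.0]

model = fit_line(ages, weights)
print("slope, intercept:", model)
print("predicted weight at age 7:", predict(model, 7))
```

Both inputs here are continuous numbers, which is exactly the situation the technique is suited to. A column such as color has no meaningful position on a number line, so it cannot be plugged into this formula directly.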

2. Classification

Working with categorical data or a mixture of continuous numeric and categorical data? Classification analysis might suit your needs well. This technique is capable of processing a wider variety of data than regression and is growing in popularity. You’ll also find output that is much easier to interpret. Instead of the complicated mathematical formula given by the regression technique, you’ll receive a decision tree that requires a series of binary decisions. Popular decision-tree algorithms include CART and C4.5. (The k-means algorithm, which is sometimes mentioned alongside them, is a clustering technique that groups unlabeled data; it is not a classification algorithm.) Take a look at the Classification Trees chapter from the Electronic Statistics Textbook for in-depth coverage of this technique.
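The “series of binary decisions” can be sketched in a few lines. The toy tree builder below is an illustration only, not CART or any other published algorithm: it picks yes/no questions by Gini impurity, asks `<=` questions of numeric columns and `==` questions of categorical ones, and all function names and the fruit data are invented for the example.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0.0 when every label is the same."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def matches(row, feature, value):
    """Binary question: numeric columns use <=, categorical columns use ==."""
    if isinstance(value, (int, float)):
        return row[feature] <= value
    return row[feature] == value


def best_split(rows, labels):
    """Return (impurity, feature, value) of the best question, or None."""
    best = None
    for feature in range(len(rows[0])):
        for value in sorted({row[feature] for row in rows}):
            yes = [l for r, l in zip(rows, labels) if matches(r, feature, value)]
            no = [l for r, l in zip(rows, labels) if not matches(r, feature, value)]
            if not yes or not no:
                continue  # the question does not separate anything
            score = (len(yes) * gini(yes) + len(no) * gini(no)) / len(labels)
            if best is None or score < best[0]:
                best = (score, feature, value)
    return best


def build_tree(rows, labels):
    """A leaf is a label; an inner node is (feature, value, yes_branch, no_branch)."""
    split = None if gini(labels) == 0.0 else best_split(rows, labels)
    if split is None:
        return Counter(labels).most_common(1)[0][0]
    _, feature, value = split
    yes = [(r, l) for r, l in zip(rows, labels) if matches(r, feature, value)]
    no = [(r, l) for r, l in zip(rows, labels) if not matches(r, feature, value)]
    return (feature, value, build_tree(*zip(*yes)), build_tree(*zip(*no)))


def classify(tree, row):
    """Walk the tree, answering one binary question per level."""
    while isinstance(tree, tuple):
        feature, value, yes_branch, no_branch = tree
        tree = yes_branch if matches(row, feature, value) else no_branch
    return tree


# Hypothetical mixed data: (color, weight in grams) -> fruit.
rows = [("red", 150), ("green", 160), ("yellow", 120),
        ("yellow", 118), ("red", 8), ("red", 10)]
labels = ["apple", "apple", "banana", "banana", "cherry", "cherry"]

tree = build_tree(rows, labels)
print(tree)
print(classify(tree, ("red", 9)))
```

The printed tree is a nested set of questions that a person can read from top to bottom, which is where the interpretability claimed above comes from.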

3. Neural Networks

Neural networks have seen an explosion of interest over the last few years, and are being successfully applied across an extraordinary range of problem domains, in areas as diverse as finance, medicine, engineering, geology and physics. Indeed, anywhere that there are problems of prediction, classification or control, neural networks are being introduced. This sweeping success can be attributed to a few key factors:

Power. Neural networks are very sophisticated modeling techniques capable of modeling extremely complex functions. In particular, neural networks are nonlinear, meaning that their output need not be a straight-line function of their inputs. For many years linear modeling has been the commonly used technique in most modeling domains, since linear models have well-known optimization strategies. Where the linear approximation was not valid (which was frequently the case), the models suffered accordingly. Neural networks also keep in check the curse of dimensionality problem that bedevils attempts to model nonlinear functions with large numbers of variables.

Ease of use. Neural networks learn by example. The neural network user gathers representative data, and then invokes training algorithms to automatically learn the structure of the data. Although the user does need to have some heuristic knowledge of how to select and prepare data, how to select an appropriate neural network, and how to interpret the results, the level of user knowledge needed to successfully apply neural networks is much lower than would be the case using (for example) some more traditional nonlinear statistical methods.

Neural networks are also intuitively appealing, based as they are on a crude low-level model of biological neural systems. In the future, the development of this neurobiological modeling may lead to genuinely intelligent computers.
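As a rough illustration of “learning by example”, the sketch below trains a single artificial neuron (a perceptron) on four labeled examples of the logical AND function. This is a deliberate simplification: one neuron can only draw a straight-line boundary, whereas the networks described above stack many units with nonlinear activations. The function names and the learning rate are assumptions chosen for the example.

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs exceeds zero, else stay silent (0)."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train(examples, epochs=20, learning_rate=1):
    """Perceptron rule: nudge the weights whenever a prediction is wrong."""
    weights = [0] * len(examples[0][0])
    bias = 0
    for _ in range(epochs):
        mistakes = 0
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if mistakes == 0:
            break  # every example is already classified correctly
    return weights, bias


# Representative data gathered by the user: inputs and the desired output.
and_examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train(and_examples)
for inputs, target in and_examples:
    print(inputs, "->", predict(weights, bias, inputs), "expected", target)
```

Nobody tells the neuron the rule for AND. It is shown examples and adjusts its own weights until its answers match, which is the workflow the “Ease of use” point describes.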

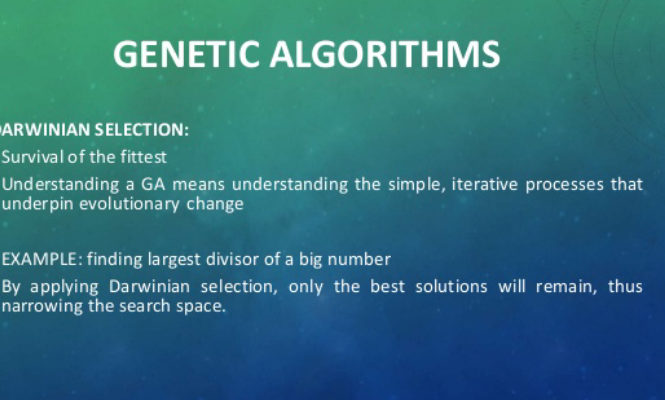

4. Evolutionary Computation

Evolutionary algorithms apply the design philosophy of natural evolution to find solutions to hard problems. Generally speaking, evolutionary techniques can be viewed either as search methods or as optimization techniques. An evolutionary algorithm (EA) is a stochastic search method based on abstractions of the processes of Darwinian evolution. An EA maintains a population of “individuals”, each of them a candidate solution to a given problem. Each individual is evaluated by a fitness function, which measures the quality of its corresponding candidate solution. Over successive generations the population evolves towards better and better individuals via a selection procedure based on natural selection (survival of the fittest) and operators based on genetics (crossover and mutation). In essence, the crossover operator swaps genetic material between individuals, whereas the mutation operator changes the value of a “gene” (a small part of the genetic material of an individual) to a new random value. Genetic algorithms (GAs) are the most popular paradigm of evolutionary algorithms.
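The loop of fitness evaluation, selection, crossover and mutation described above can be sketched as a small genetic algorithm. The problem here is a standard toy one chosen for illustration (evolve a string of bits towards all ones); the tournament selection, the practice of carrying the best individual forward unchanged, and every parameter value are assumptions, not details taken from the text.

```python
import random


def fitness(individual):
    """Quality of a candidate solution: the number of 1 genes."""
    return sum(individual)


def select(population, rng, k=3):
    """Tournament selection: the fittest of k random individuals survives."""
    return max(rng.sample(population, k), key=fitness)


def crossover(parent_a, parent_b, rng):
    """One-point crossover: the child takes a prefix of one parent and a suffix of the other."""
    point = rng.randrange(1, len(parent_a))
    return parent_a[:point] + parent_b[point:]


def mutate(individual, rng, rate=0.02):
    """With a small probability, replace a gene with a new random value."""
    return [rng.randint(0, 1) if rng.random() < rate else gene
            for gene in individual]


def evolve(genes=20, pop_size=30, generations=40, seed=0):
    rng = random.Random(seed)  # seeded so that runs are repeatable
    population = [[rng.randint(0, 1) for _ in range(genes)]
                  for _ in range(pop_size)]
    history = [max(fitness(ind) for ind in population)]
    for _ in range(generations):
        # Carry the current best individual forward unchanged.
        children = [max(population, key=fitness)]
        while len(children) < pop_size:
            child = crossover(select(population, rng),
                              select(population, rng), rng)
            children.append(mutate(child, rng))
        population = children
        history.append(max(fitness(ind) for ind in population))
    return max(population, key=fitness), history


best, history = evolve()
print("best individual:", best)
print("best fitness per generation:", history)
```

Because the best individual is always copied into the next generation, the best fitness can never decrease from one generation to the next. Without that step, a good solution can be lost to an unlucky crossover or mutation.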
